ARC-PA 5th Edition Standards: Appendix 14G, Sufficiency and Effectiveness of Faculty and Staff

Appendix 14G looks at the sufficiency and effectiveness of your PA program’s principal and instructional faculty and staff. A word of warning, however: you still must develop, and explain, your own methodology for determining sufficiency, and that methodology must address the complexity of the program itself.

Appendix 14G expects the following elements in your self-study report (SSR):

- Provide narrative describing the factors used to determine effectiveness of principal and instructional faculty in meeting the academic needs of enrolled students and managing the administrative responsibilities of the program

- Describe how the program collects data related to those factors to determine effectiveness of program faculty in meeting the program’s needs

- Provide narrative describing the factors used to determine effectiveness of administrative support staff in meeting the administrative responsibilities consistent with the organizational complexity and total enrollment of the program

- Describe how the program collects data regarding the effectiveness of administrative support staff in meeting the program needs

To respond, draw on both faculty and student evaluations of the Appendix 14G elements to develop a thorough, 360-degree view.

Providing Benchmarks and Trends

A note about your benchmark numbers: remember, every benchmark requires a rationale. For example, if your benchmark is 3.5, why? Does it reflect a goal of 80% approval? Similarly, if your benchmark is 3.0, does it reflect a goal of 70% approval? The rationale must come from the program itself, and failing to state it could result in your SSR being found inadequate. Any time a faculty member does not meet a benchmark, the SSR must include a narrative analysis of the disposition, explaining whether the individual received faculty development or has a plan to improve performance.
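To make the link between a mean benchmark and an approval rate concrete, here is a minimal Python sketch. It assumes a 5-point scale on which ratings of 4 or 5 count as "approval"; that threshold, the `evaluate` and `needs_narrative` helpers, and the ratings are hypothetical and for illustration only. Your program must define its own scale, approval threshold, and rationale. The sketch reports each faculty member’s mean and approval rate and flags anyone below the benchmark who needs a narrative analysis.

```python
from dataclasses import dataclass

# Assumption: 5-point Likert scale; ratings of 4 ("agree") or 5 ("strongly agree")
# count as approval. Each program must define and justify its own threshold.
APPROVAL_THRESHOLD = 4


@dataclass
class BenchmarkResult:
    name: str
    mean: float
    approval_rate: float
    meets_benchmark: bool


def evaluate(name: str, ratings: list[int], mean_benchmark: float) -> BenchmarkResult:
    """Compare one faculty member's ratings with a program-defined mean benchmark."""
    if not ratings:
        raise ValueError(f"no ratings for {name}")
    mean = sum(ratings) / len(ratings)
    # Share of respondents at or above the approval threshold.
    approval = sum(r >= APPROVAL_THRESHOLD for r in ratings) / len(ratings)
    return BenchmarkResult(name, mean, approval, mean >= mean_benchmark)


def needs_narrative(results: list[BenchmarkResult]) -> list[str]:
    """Faculty below benchmark, each of whom needs a narrative analysis of the disposition."""
    return [r.name for r in results if not r.meets_benchmark]


# Hypothetical ratings, for illustration only.
ratings = {
    "Faculty A": [5, 4, 4, 5, 3],
    "Faculty B": [3, 3, 4, 2, 3],
}
results = [evaluate(name, r, mean_benchmark=3.5) for name, r in ratings.items()]
for res in results:
    print(f"{res.name}: mean={res.mean:.2f}, approval={res.approval_rate:.0%}, "
          f"meets benchmark={res.meets_benchmark}")
print("Needs narrative:", needs_narrative(results))
```

With these made-up ratings, Faculty A averages 4.20 with 80% approval, while Faculty B averages 3.00 with 20% approval and is flagged. Reporting the approval rate alongside the mean can make the benchmark’s rationale easier to state, because a mean alone does not show how many respondents actually approved.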

Also, whenever possible, include trend data in your report. If you use a survey such as the following, and you still have surveys from your last rendition of 13I and 13L (under the previous Standards), you may be able to link some of the questions across the two so that you can report trend data.

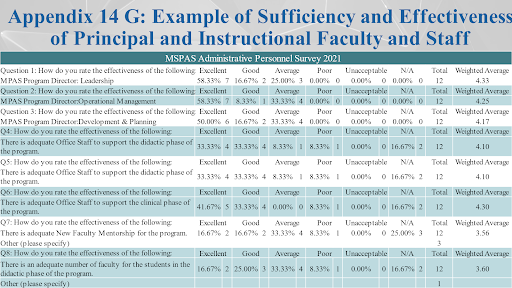

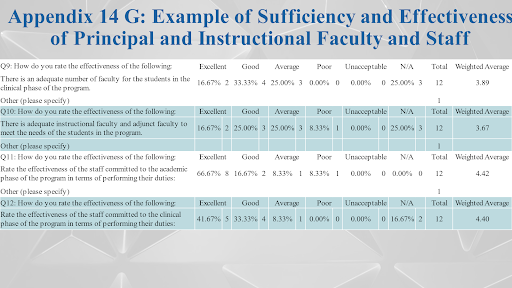
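Once you decide which old and new questions are comparable, linking them is mostly bookkeeping. The Python sketch below assumes each survey has already been summarized as a mean rating per question; the question IDs, the `QUESTION_MAP` pairing, the `build_series` helper, and the cohort ratings are all hypothetical. Only link questions whose wording is genuinely comparable.

```python
# Hypothetical question IDs: each new Appendix 14G survey item is mapped to the
# comparable item on the older 13I/13L survey, where one exists.
QUESTION_MAP = {
    "14G_faculty_sufficient": "13I_q3",
    "14G_staff_effective": "13L_q2",
}


def build_series(surveys, question_map):
    """Build a trend series for each new question.

    surveys: list of (cohort_label, {question_id: mean_rating}) in chronological order.
    Returns {new_question_id: [(cohort_label, mean_rating), ...]}.
    """
    series = {new_q: [] for new_q in question_map}
    for cohort, means in surveys:
        for new_q, old_q in question_map.items():
            # Prefer the new item; fall back to the linked item from the old survey.
            if new_q in means:
                series[new_q].append((cohort, means[new_q]))
            elif old_q in means:
                series[new_q].append((cohort, means[old_q]))
    return series


# Hypothetical mean ratings, for illustration only.
surveys = [
    ("Cohort 1", {"13I_q3": 3.8, "13L_q2": 3.4}),
    ("Cohort 2", {"13I_q3": 3.9, "13L_q2": 3.3}),
    ("Cohort 3", {"14G_faculty_sufficient": 4.1, "14G_staff_effective": 3.2}),
]
for question, points in build_series(surveys, QUESTION_MAP).items():
    print(question, points)
```

Each new question ends up with one continuous series that spans both instruments, which is the trend view the SSR can then chart.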

The following is part of a survey I put together to address the elements of Appendix 14G. It comes from a program that had some below-benchmark areas.

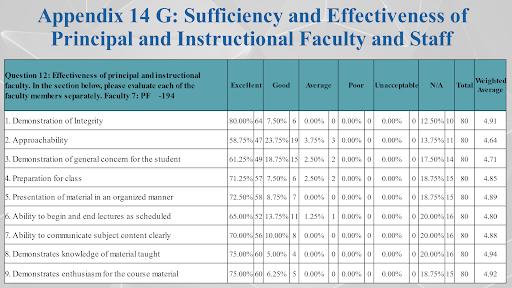

We’re used to gathering data on, for example, the effectiveness of a faculty member within a course. With the new Standards, I created a survey in which students evaluate each faculty member in a more global way. We ask, “How effective is this faculty member?” rather than just looking at the microcosm of a classroom. Here are the results:

This data can be collected at the end of the didactic year or as part of the exit survey. I have found it valuable for giving a faculty member an overall rating, rather than relying only on course or instructor evaluations. Those evaluations still must happen, of course, but an overall evaluation provides another viewpoint. Interestingly, when I compare the two, students usually evaluate a faculty member with better perspective when they can sit back and think about that person’s whole body of work. The chart above shows a weighted average. You don’t necessarily have to include every question from these instruments, but doing so provides more granular information.
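A weighted average of Likert responses is computed from response counts: multiply each scale value by the number of respondents who chose it, sum the products, and divide by the total number of respondents. Here is a minimal Python sketch assuming a 5-point scale; the response counts and the second survey item are hypothetical. The `overall_rating` helper, also hypothetical, simply averages the per-question results when you choose to include several questions.

```python
# Assumption: 5-point scale (1 = not at all effective ... 5 = very effective).


def weighted_average(counts: dict[int, int]) -> float:
    """Weighted mean rating from {scale_value: number_of_responses}."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses")
    return sum(value * n for value, n in counts.items()) / total


def overall_rating(questions: dict[str, dict[int, int]]) -> float:
    """Unweighted mean of per-question weighted averages for one faculty member."""
    averages = [weighted_average(c) for c in questions.values()]
    return sum(averages) / len(averages)


# Hypothetical response counts for one faculty member, for illustration only.
questions = {
    "How effective is this faculty member?": {5: 18, 4: 12, 3: 6, 2: 2, 1: 2},
    "Hypothetical item 2": {5: 20, 4: 10, 3: 8, 2: 2, 1: 0},
}
for q, c in questions.items():
    print(f"{q} {weighted_average(c):.2f}")
print(f"Overall: {overall_rating(questions):.2f}")
```

For the first item, 162 total points over 40 respondents gives a weighted average of 4.05.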

Accounting for COMPLEXITY

If you use only PAEA ratios to demonstrate sufficiency and effectiveness, the Commission tends to find the data inadequate. PAEA ratios are fine as one data source, but you must also account for the complexity of the program. What do we mean by complexity? I interpret it as the pedagogical model of the program along with the administrative responsibilities of the faculty.

Programs continue to struggle to get workload credit for committee work. One thing we’ve repeatedly discussed in this blog series is the sheer scope of assessment that needs to be done. In many cases, faculty take responsibility for assessment on top of their overall workload. If they aren’t given credit for that work, it becomes a problem, and often the work doesn’t get done.

Faculty and students may also evaluate administrative support staff for sufficiency and effectiveness. I like to break this down by asking whether the administrative support staff are perceived as adequate in the didactic phase as well as in the clinical phase. Some programs lack adequate administrative support staff for both phases of the program.
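If your survey asks faculty and students separately about the adequacy of support staff in each phase, a simple tally keeps those results compartmentalized. The Python sketch below assumes each response has been reduced to a yes/no judgment of adequacy; the response records and the `adequacy_by_phase` helper are hypothetical and for illustration only.

```python
from collections import defaultdict

# Hypothetical survey responses: (respondent_group, phase, rated_adequate).
responses = [
    ("student", "didactic", True),
    ("student", "didactic", True),
    ("student", "didactic", False),
    ("student", "didactic", True),
    ("student", "clinical", True),
    ("student", "clinical", False),
    ("student", "clinical", False),
    ("student", "clinical", True),
    ("faculty", "didactic", True),
    ("faculty", "didactic", True),
    ("faculty", "clinical", False),
    ("faculty", "clinical", True),
]


def adequacy_by_phase(responses):
    """Share of respondents rating support staff adequate, per (group, phase)."""
    tallies = defaultdict(lambda: [0, 0])  # (group, phase) -> [adequate, total]
    for group, phase, adequate in responses:
        tally = tallies[(group, phase)]
        tally[0] += int(adequate)
        tally[1] += 1
    return {key: count / total for key, (count, total) in tallies.items()}


for (group, phase), share in sorted(adequacy_by_phase(responses).items()):
    print(f"{group:8} {phase:9} {share:.0%}")
```

With these made-up responses, students rate didactic support adequate 75% of the time but clinical support only 50%, which would point to the clinical phase as the place to look more closely.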

How many support staff do you need? That goes back to organizational complexity. Certainly, you can look at the number of students you have, but class size, the number of faculty the staff serve, the time spent on admissions activities, and similar factors all add to complexity: the more of each, the more complex the program.

The following two-part chart is a way of compartmentalizing some data. It shows the status of various members of the team.

Next Time…

In the next installment of the Massey Martin blog, we’ll look at the final section of Appendix 14. Appendix 14H is concerned with success in meeting the program’s goals, and we’ll examine how goals are presented on your program’s website and in your SSR.